Understanding and Coding Self-Attention, Multi-Head Attention, Cross-Attention

Part one

This article will teach you about self-attention mechanisms used in transformer architectures and large language models (LLMs) such as GPT-4 and Llama. Self-attention and related mechanisms are core components of LLMs, making them a useful topic to understand when working with these models.

Introducing Self-Attention

Since its introduction in the original transformer paper (Attention Is All You Need), self-attention has become a cornerstone of many state-of-the-art deep learning models, particularly in Natural Language Processing (NLP). Because self-attention is now everywhere, it's important to understand how it works.

The concept of "attention" in deep learning originated from efforts to enhance Recurrent Neural Networks (RNNs) in managing longer sequences or sentences. For example, when translating a sentence from one language to another, translating word-by-word often fails to capture the intricate grammatical structures and idiomatic expressions unique to each language, resulting in inaccurate or incoherent translations.

To overcome this issue, attention mechanisms were introduced to give access to all sequence elements at each time step. The key is to be selective and determine which words are most important in a specific context. In 2017, the transformer architecture introduced a standalone self-attention mechanism, eliminating the need for RNNs altogether.

Self-attention can be understood as a mechanism that enriches an input embedding by incorporating contextual information from the entire input sequence. Essentially, it allows the model to assess the significance of various elements within the sequence and dynamically adjust their contributions to the output. This is particularly valuable in language processing tasks, where the meaning of a word often depends on its context within a sentence or document.
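To make "enriching an embedding with context" concrete, here is a minimal sketch in plain Python of a simplified self-attention with no trainable weights. Each element's similarity to every other element is a dot product, softmax turns those scores into weights, and the context vector is the weighted sum of all embeddings. This simplification is an assumption for illustration only: the mechanism used in transformers adds learned query, key, and value projections and a scaling factor. The function names and the toy 2-dimensional vectors are hypothetical.

```python
import math

def softmax(scores):
    # Subtract the maximum before exponentiating for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def simple_self_attention(embeddings):
    """Return one context vector per input embedding (no trainable weights)."""
    dim = len(embeddings[0])
    context = []
    for query in embeddings:
        # Dot-product similarity between this element and every element in the sequence
        scores = [sum(q * k for q, k in zip(query, key)) for key in embeddings]
        # Normalize the scores into attention weights that sum to 1
        weights = softmax(scores)
        # Context vector: attention-weighted sum of all input embeddings
        context.append([sum(w * emb[d] for w, emb in zip(weights, embeddings))
                        for d in range(dim)])
    return context

# Three toy 2-dimensional "embeddings" (hypothetical values)
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
ctx = simple_self_attention(x)
for vec in ctx:
    print([round(v, 3) for v in vec])
```

Each output row mixes information from the whole sequence, weighted by how similar each element is to the query element.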

While there are numerous variations of self-attention, much of the research has focused on improving its efficiency. Nonetheless, most implementations still rely on the original scaled dot-product attention mechanism introduced in the Attention Is All You Need paper, since self-attention is rarely a computational bottleneck for companies training large-scale transformer models.
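For reference, scaled dot-product attention from the Attention Is All You Need paper is commonly written as below. The symbols follow standard notation rather than anything defined earlier in this article: the rows of X are the input embeddings, W_q, W_k, and W_v are learned projection matrices, and d_k is the dimension of the query and key vectors.

$$
Q = XW_q, \quad K = XW_k, \quad V = XW_v
$$

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right) V
$$

Dividing by √d_k keeps the dot products from growing with the vector dimension, which would otherwise push the softmax into regions with very small gradients.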

In this article, we concentrate on the original scaled dot-product attention mechanism, commonly referred to simply as self-attention, which remains the most popular and widely used approach in practice. For those interested in other attention mechanisms, consider reviewing the 2020 paper Efficient Transformers: A Survey, the 2023 paper A Survey on Efficient Training of Transformers, and the more recent FlashAttention and FlashAttention-2 papers.
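As a rough illustration of the mechanism this article focuses on, the following plain-Python sketch computes scaled dot-product attention with nested lists standing in for tensors. It assumes the projection matrices w_q, w_k, and w_v are simply given; in a real model they are trainable parameters. All function and variable names, and the toy numbers, are hypothetical.

```python
import math

def matmul(a, b):
    # (n x m) @ (m x p) -> (n x p) using plain nested lists
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def softmax_rows(m):
    # Row-wise softmax, subtracting each row's max for numerical stability
    out = []
    for row in m:
        mx = max(row)
        exps = [math.exp(v - mx) for v in row]
        s = sum(exps)
        out.append([e / s for e in exps])
    return out

def scaled_dot_product_attention(x, w_q, w_k, w_v):
    # Project the inputs into queries, keys, and values
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # Unnormalized attention scores: one row per query, one column per key
    scores = matmul(q, transpose(k))
    # Scale by sqrt(d_k), then normalize each row into attention weights
    weights = softmax_rows([[s / math.sqrt(d_k) for s in row] for row in scores])
    # Each context vector is an attention-weighted sum of the value vectors
    return matmul(weights, v), weights

# Toy inputs: 3 tokens with 2-dimensional embeddings (hypothetical values)
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_q = [[1.0, 0.0], [0.0, 1.0]]
w_k = [[1.0, 0.0], [0.0, 1.0]]
w_v = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
context, attn = scaled_dot_product_attention(x, w_q, w_k, w_v)
print(len(context), len(context[0]))
```

In practice, libraries provide optimized versions of this computation; PyTorch, for example, offers torch.nn.functional.scaled_dot_product_attention.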

Embedding an Input Sentence

To get started, let's take the input sentence "Life is short, eat dessert first" and examine how it passes through the self-attention mechanism. As with other text modeling approaches (e.g., recurrent neural networks or convolutional neural networks), the first step involves creating an embedding for the sentence.

For simplicity, we'll use a limited dictionary dc that contains only the words from the input sentence. In practical applications, however, the vocabulary would encompass all the words from the training dataset, typically ranging between 30,000 and 50,000 entries.

Next, we use this dictionary to assign an integer index to each word:

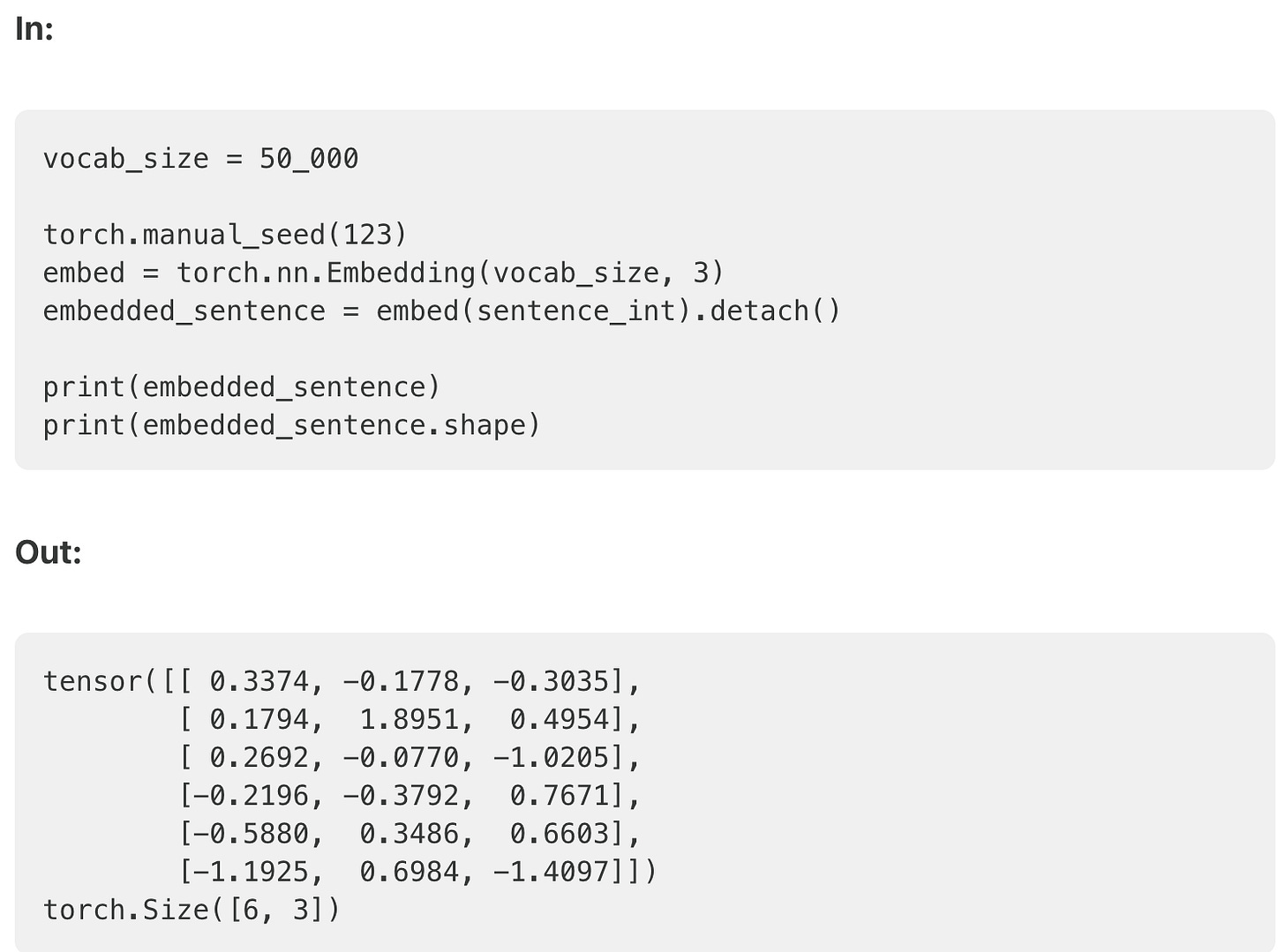
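One way to build the dictionary dc and map the sentence to integer indices is sketched below in plain Python. It assumes punctuation is stripped and that words are indexed in sorted order; the name sentence_int is hypothetical. Note that Python sorts uppercase letters before lowercase ones, so "Life" receives index 0.

```python
sentence = "Life is short, eat dessert first"

# Vocabulary restricted to the words of this one sentence (comma removed),
# with each word mapped to its position in sorted order
words = sentence.replace(",", "").split()
dc = {word: idx for idx, word in enumerate(sorted(words))}

# Integer representation of the sentence: one index per word, in sentence order
sentence_int = [dc[word] for word in words]

print(dc)            # {'Life': 0, 'dessert': 1, 'eat': 2, 'first': 3, 'is': 4, 'short': 5}
print(sentence_int)  # [0, 4, 5, 2, 1, 3]
```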

Next, given the integer-vector representation of the input sentence, we can use an embedding layer to encode the inputs into real-valued vector embeddings. Here, we use a tiny 3-dimensional embedding, so each input word is represented by a 3-dimensional vector.

Note that embedding sizes typically range from hundreds to thousands of dimensions. For instance, the 7B variant of Llama 2 uses an embedding size of 4,096. We use 3-dimensional embeddings here purely for illustration, so that we can examine the individual vectors without filling the entire page with numbers.

Since the sentence consists of 6 words, this will result in a 6×3-dimensional embedding matrix, with one 3-dimensional row per word.
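The following plain-Python sketch shows what an embedding lookup of this kind does, in the spirit of a layer such as PyTorch's torch.nn.Embedding: a table with one row per vocabulary entry, indexed by the integer word IDs. The random initialization and seed are assumptions, so the values will not match any particular framework's output, and the indices assume the sorted-dictionary ordering for "Life is short, eat dessert first".

```python
import random

vocab_size = 6   # number of entries in the small dictionary dc
embed_dim = 3    # tiny embedding size, for illustration only
sentence_int = [0, 4, 5, 2, 1, 3]  # hypothetical indices for the six words

random.seed(123)
# Embedding table: one randomly initialized row per vocabulary entry
# (in a trained model these values would be learned)
embedding_table = [[random.gauss(0.0, 1.0) for _ in range(embed_dim)]
                   for _ in range(vocab_size)]

# Embedding lookup: replace each integer index with its row of the table
embedded_sentence = [embedding_table[i] for i in sentence_int]

print(len(embedded_sentence), len(embedded_sentence[0]))  # 6 3
```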

I will continue with the next section of this write-up in a follow-up post; I'm keeping this part brief. Watch this space for the continuation on coding transformer attention, and thank you for reading.

This write-up draws on the book Build a Large Language Model (From Scratch).